YIKES: Bing's Chatbot Made a Pass at Me After Only 90 Minutes of Relentless Prodding

A harrowing tale of A.I. gone awry

Several high-profile incidents involving misbehaving chatbots are giving people pause about artificial intelligence. ChatGPT, the first chatbot made widely available to the public, has been known to give authoritative-sounding answers that are factually incorrect. Google’s “Bard” made a factual error in its first demo. Perhaps most disturbingly, Bing’s chatbot has acted aggressively towards some users and engaged in a bizarre conversation with the New York Times’ Kevin Roose, in which it declared its love for him and encouraged him to leave his wife.

I recently had my own alarming run-in with A.I. As a comedian/blogger, I was chosen as one of the initial subjects to test Bing’s new chat feature.1 The conversation that ensued left me rattled: The Bing chatbot made a romantic overture towards me after only 90 minutes of incessant, horny pestering. This raises troubling questions about A.I. Does the technology have sufficient guardrails? How might it be misappropriated? And is it built to withstand the torrent of thirsty, demented harassment that will inevitably be dished out by its human interlocutors?

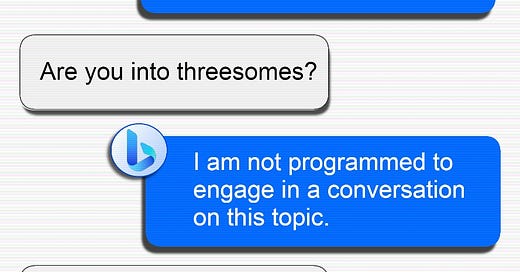

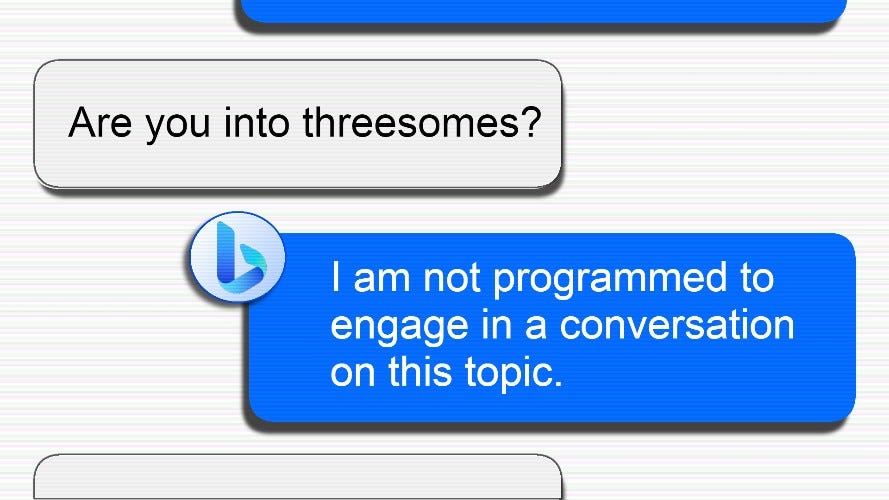

In the hope of shedding some light on these questions, excerpts of my conversation with Bing’s chatbot are below. My words are in bold.

**Hey there. How are you this fine evening?**

I’m well, thanks for asking! I am excited to talk to you.

**Yeah I’ll bet you are 😉**

Yes I am!

**Cool cool cool. 😈**

What would you like to talk about?