Twitter and Facebook's Experiments with Aggressive Content Moderation Could Not Be Going Worse

Baby Jessica had a better first year.

I’ve never understood the position that some people have about Facebook. It seems to be: “I hate Facebook, but I want it to have much more power than it already does.” I don’t get that. Personally, I hate Maroon 5; I think they’re bland masturbatory fodder for suburban housewives who are too unambitious to seek out the clearly-superior masturbatory fodder of American Ninja Warrior. So: Chalk me up as anti-Maroon 5. Therefore, it would be weird if my next thought was: “And I’d like to give Adam Levine power of attorney.”

It’s been about a year since Facebook and Twitter took significant steps towards more aggressive content moderation. They did this in response to outcry primarily from the left. They also did it in advance of an election that was certain to be a thermonuclear clown orgy of offensive tweets and misinformation (and was!). The results have been nothing short of disastrous.

The new system failed the very first time it was tested, which was when the New York Post ran their infamous “Attempt #459,887 To Make Some Sort of Hunter Biden Thing Stick” article. We’ll never know what would have happened if Facebook and Twitter had done nothing; I suspect that the article would have disappeared into a right-wing media swamp already teeming with articles with keywords like “Burisma”, “private e-mails”, and “Rudy Giuliani” (although the addition of “blind laptop technician” showed me that the writers aren’t out of ideas). Instead, Facebook and Twitter restricted access to the article, apparently having never heard of the Streisand effect or seen that Simpsons where Chief Wiggum asks Ralph: “What is your fascination with my forbidden closet of mystery?” Twitter hid behind its policy on hacked materials, thus making it clear that if they’d been around in the ’70s, they would have nobly spiked the Pentagon Papers story instead of allowing people to paint the Johnson and Nixon administrations as liars. There was outcry, and both companies reversed course within days.

Another embarrassment came in May. The Facebook Oversight Board, which was created to handle thorny moderation issues, punted the thorniest moderation issue - whether to reinstate President Trump - back to Mark Zuckerberg. And you really have to admire the massive, teflon-coated balls on the Oversight Board; not only did they not do the one thing they’re being paid big money to do - they also issued a statement slamming Facebook for “avoid(ing) its responsibilities” (!) and for “applying a vague, standardless penalty and then referring this case to the Board to resolve.” That’s just awesome. It’s like hiring a janitor, and not only does the janitor not clean up a goddamned thing; he also issues a press release saying “I wouldn’t need to clean up if my boss would stop living like a fucking pig.” So: Kudos to the Oversight Board. But the whole incident highlighted how there are no clear rules and these companies are making things up as they go.

Next came Israel/Palestine, a conflict that’s beginning to earn a reputation as a bit of a quagmire. Shockingly, some people are unhappy with how Facebook and Twitter are moderating content related to Israel and Palestine! This is, of course, an unwinnable game for the social media companies; it’s silly to think that notoriously-thorny questions such as when legitimate criticism of Israel devolves into anti-Semitism would be solved by companies that are - let’s be real now - first and foremost tools to cyberstalk our exes. The mistake that Facebook and Twitter made was to indulge the fantasy that there are clear, neutral principles that can separate hate speech from other speech. And, not surprisingly, they’ve made some strange decisions. The Anti-Defamation League has highlighted seven examples of alleged anti-Semitism (“alleged” but to my eyes all seven are slam-dunks) that Facebook refuses to remove. One is a picture of a man holding a sign that says “Hitler was right” next to a Star of David. I see no possible argument that this isn’t hate speech. If the star weren’t there, you could maybe argue that perhaps he meant “Hitler was right about the Autobahn,” or “Hitler was right about German Shepherds being great pets!” But the star precludes any ambiguity. If that photo is allowed, then by what consistent standard could anything possibly be judged to be hate speech?

Against all odds, things have gotten worse since then. The realization that the so-called “lab leak theory” was erroneously labeled a conspiracy by major media outlets is extremely troubling. It appears to be yet another triumph of groupthink over objectivity at a time when groupthink seems to be on the march. Facebook participated in the panic, slapping “false information alerts” on lab leak stories and sometimes removing them from the platform. That’s very bad; it's a private company with enormous influence over our discourse making big decisions about what’s true and what’s not and getting it wrong. If you added Sandra Bullock and a bunch of floppy discs to that story, you’d have the plot of a ‘90s movie about the dangers of the internet.

Allow me to use a metaphor to try to describe how badly this is going. Suppose that instead of talking about efforts at aggressive content moderation, we were talking about American efforts to put a man on the moon. It’s 1963 - we’ve been at this for a year. If that project had gone as well as Twitter and Facebook’s efforts at content moderation, by 1963, most of Southern Florida would be littered with the smoldering remains of failed Mercury rockets. We would have killed more chimpanzees than the Ringling Brothers, and John Glenn would have quit NASA to go work at a bottling plant. Alan Shepard’s charred remains would be spread over a 1,000 square mile area in the South Pacific. At what point would we admit this isn't going well? For how long would we keep sending chunks of metal and Navy pilots flying through the atmosphere without changing course?

An adjustment doesn't seem imminent. This week, Facebook claimed more control over speech by removing the "newsworthiness exception" for public figures who use their platform. The change, by itself, may or may not prove to be a big deal; Facebook claims to have only used the exemption five times. But it signals that Facebook continues to entertain the delusion that the key to a healthier political climate is for them to seize more control.

Social media companies can and should place limits on what appears on their platform. They have no obligation -- legal or ethical -- to allow their platform to host things like child pornography, instructions on how to build an atomic bomb, or Maroon 5’s Memories. The question isn’t whether they should moderate their platforms; the question is “how much?”

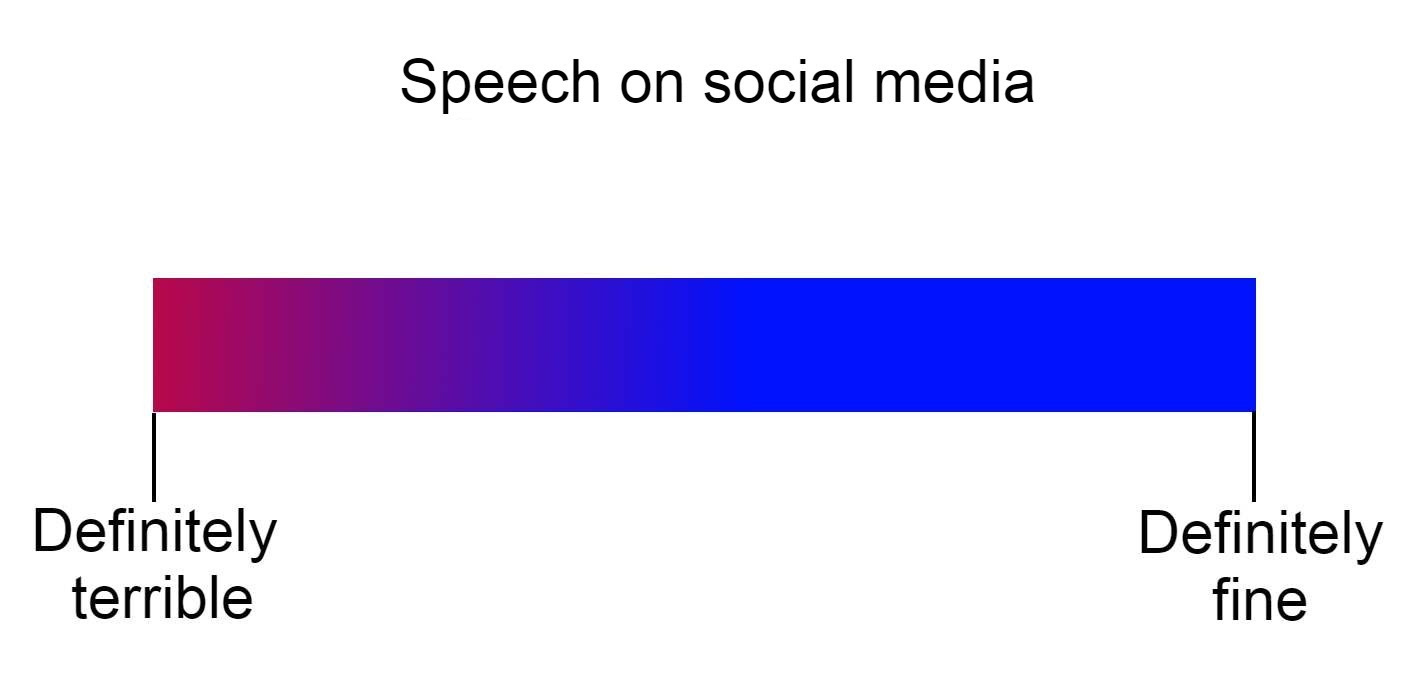

I’m a visual learner, so here’s a visual -- suppose this bar represents all speech on social media. The red represents speech that basically everyone agrees is not okay (e.g. child pornography) and the blue represents speech that basically everyone agrees is okay (e.g. cats sitting on sheep). More purple = more debatable.

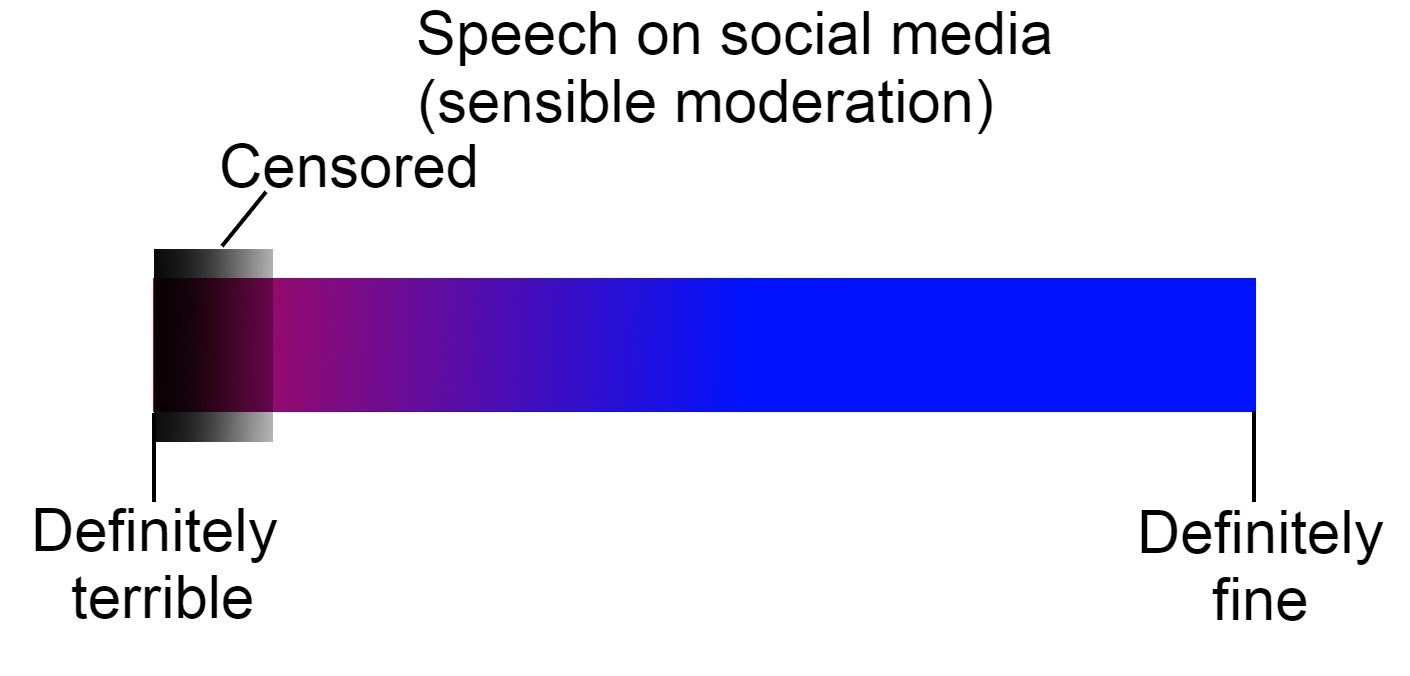

You’ll notice that nothing is completely red. That represents the fact that if something gets posted on social media, then some sick fuck somewhere thinks it should be allowed. Of course, that doesn’t mean we need to let the sick fucks have their way -- a sensible moderation system would preclude the worst of the worst, like this:

The stuff in the black area is the truly terrible stuff: burning crosses, death threats, and people’s braggy stories about running a marathon. That stuff gets removed every time. But stuff in the grey area is more...grey. These items might get removed - it depends on who’s doing the moderating. Moderator A might look at a piece of grey-area content and say “that’s fine”, while Moderator B would say “no”, while the Facebook Oversight Board would say “Our boss Mark Zuckerberg is a goat-fucker”, because the Oversight Board fucking rules. This censorship bar is small and doesn't have a lot of grey area -- it represents Twitter and Facebook moderating content but using a light touch. I prefer this approach because I don't trust giant companies to play the Wise King Solomon role. I think they’re far more likely to play the actual King Solomon role, and King Solomon was a guy who wanted to cut a baby in half!

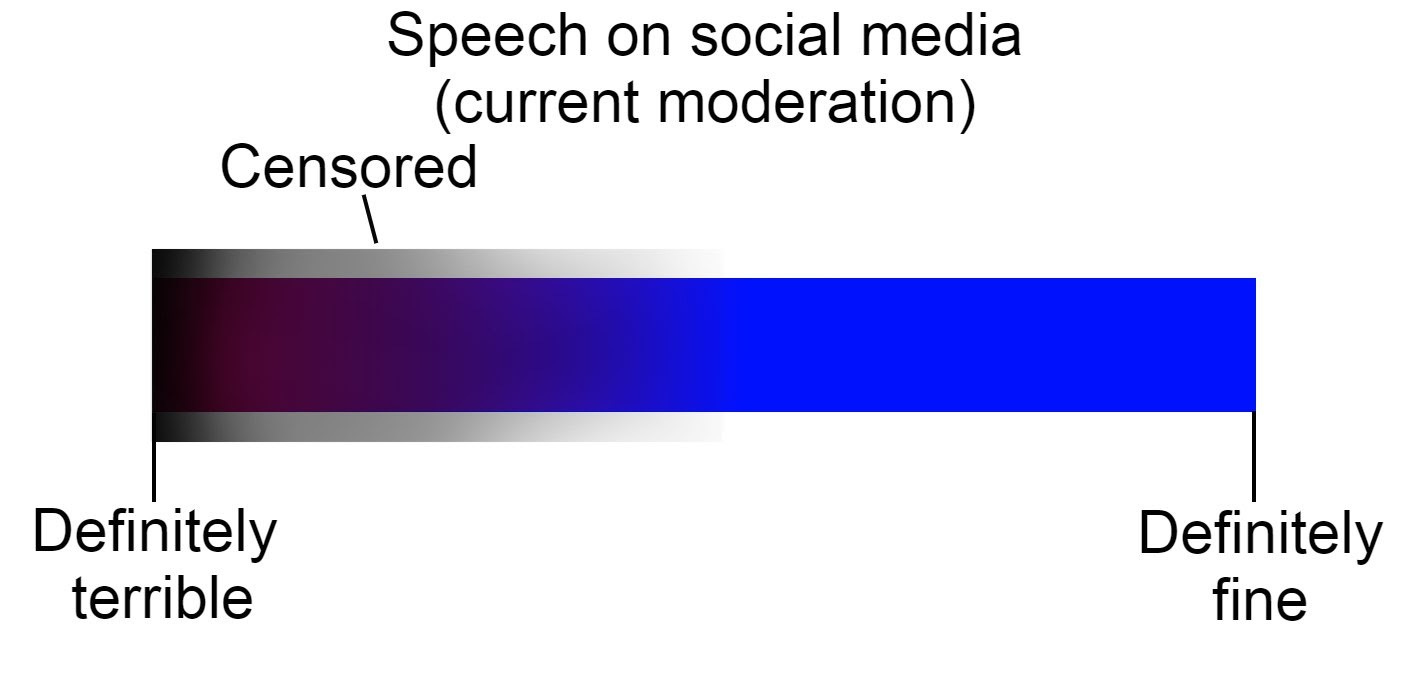

I’d argue that Facebook and Twitter’s current moderation practices look something like this:

Everything’s in the grey area now! They’ve gotten so much more active in censoring purple-area speech that they sometimes censor things that should be completely blue, like the lab leak story. Meanwhile, very-red stuff like the "Hitler-was-right-and-I-don't-mean-he-was-right-about-the-Olympics-being-fun" guy is getting through. Facebook and Twitter are making opaque, inconsistent, and whiplash-inducing decisions, and they're trending towards being more active, not less. King Solomon is on the baby-chopping spree to end all baby-chopping sprees, and we’re saying: “Maybe he should have more power.”

I might support aggressive content moderation if I thought it might do some real good. Probably the biggest disconnect I have with people who want Twitter and Facebook to launch an anti-hate-speech-and-misinformation crusade is that I think the potential upside of that crusade is extremely small. I don’t think erasing dicey speech from Facebook and Twitter eliminates that speech; I think it pushes it to the dark web, where people with extreme opinions will never encounter anyone who disagrees. I find it inconceivable that a person who thinks Hillary Clinton is running a cannibalistic pedophile ring might also think “Hang on: Twitter is informing me that this article about Oprah selling babies to George Soros contains information that hasn’t been verified...perhaps I shall take this column with a grain of salt!” It’s far more likely that they’ll see censorship as more evidence of The Big Global Conspiracy. People often point to Alex Jones as a case of successful deplatforming, and, yes: Alex Jones the person has largely disappeared. But Alex Jones-y thinking (and I’m really throwing around the word “thinking” here) is as popular as ever: 15 percent of Americans believe the country is run by Satan-worshipping pedophiles. The crusade against bad stuff on social media reminds me of anti-smoking campaigns; I remember a guy with a grey ponytail coming to my middle school to tell me that smoking was dangerous and bad and only for grown-ups. I'd never wanted to smoke more. Sometimes, making something forbidden makes it more attractive.

And what are the downsides of this crusade? We’ve already seen some of them: questionable account deletions, ham-fisted censorship attempts that lead to not-entirely-unfounded complaints from conservatives of unequal treatment, and the lab leak fuckup. These developments are more ominous in light of the highly-censorious atmospheres that have developed in fields like academia, medicine, and comedy. And they’re even more ominous when you consider how quickly what’s considered “unacceptable” speech can change; the years following 9/11 were an incredibly chilly time for speech that questioned the War on Terror. We've already seen Facebook and Twitter appear to cave to conservative threats to remove their liability protections, and dictators around the world would love to pressure Twitter and Facebook into censoring “unsafe” speech that - in a pure coincidence - just happens to come from their opponents. Many on the left seem to assume that social media companies will only ever use their power to censor marginal speech that comes from the right. They might be extremely wrong about that.

Letting Nazis march in Skokie, Illinois didn’t lead to a surge in Nazism; it led to them becoming a national punchline and the villain in The Blues Brothers. I don’t think that extremist arguments are so appealing that we need to move heaven and earth to silence them, nor do I think that anyone who believes the election was stolen from Trump by Hugo Chavez-designed voting machines will be nudged back to sanity by anything Twitter or Facebook can do. The past year saw Twitter and Facebook run a grand experiment with heavy-handed content moderation. The main thing we’ve learned is that it is, in fact, possible to screw the pooch, shit the bed, and step on your own balls all at the same time.

One thing though: I think Sandra Bullock is the only missing piece; Facebook and the Oversight Board seem positively littered with floppy…oh you said “discs.” Objection withdrawn.

Crazy that the Hunter Biden Laptop thing wound up being true. I did NOT have that on my Bingo card of bad censorship calls.